|

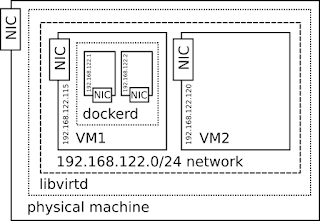

| schema of docker containers running in libvirt/KVM guest, all on one network |

Libvirt uses just the default configuration, with its default network.

On one of the guests I installed Docker (on RHEL 7 it is in the rhel-7-server-extras-rpms repository) and changed its configuration to use a custom bridge (yet to be created):

```
[root@docker1 ~]# grep ^OPTIONS /etc/sysconfig/docker
OPTIONS='--selinux-enabled -b=bridge0'
```

Now create the new bridge, which will get a "public" IP address assigned (public within the scope of libvirt's network). First, remove Docker's default `docker0` bridge:

```
[root@docker1 ~]# ip link set docker0 down   # first bring it down
[root@docker1 ~]# brctl delbr docker0        # delete it (brctl is in the bridge-utils package)
```

Then attach `eth0` to the new bridge and let `bridge0` obtain its address from libvirt's DHCP server:

```
[root@docker1 ~]# cat /etc/sysconfig/network-scripts/ifcfg-eth0
DEVICE="eth0"
BRIDGE="bridge0"
HWADDR="52:54:00:13:76:b5"
ONBOOT="yes"
[root@docker1 ~]# cat /etc/sysconfig/network-scripts/ifcfg-bridge0
DEVICE=bridge0
TYPE=Bridge
BOOTPROTO=dhcp
ONBOOT=yes
DELAY=0
```
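If you repeat this on several guests, the two files can be written by a small shell function. This is a minimal sketch: `write_bridge_ifcfg` is a hypothetical helper (not part of any package), it overwrites any existing `ifcfg-eth0` and `ifcfg-bridge0` in the target directory, and the MAC address passed in must be the guest's own `eth0` address rather than the one shown in this post.

```sh
#!/bin/sh
# Hypothetical helper: writes the eth0/bridge0 ifcfg files used in this post.
# Usage: write_bridge_ifcfg DIR MAC
#   DIR - target directory (defaults to the RHEL 7 network-scripts directory)
#   MAC - hardware address of eth0 (required)
write_bridge_ifcfg() {
    dir="${1:-/etc/sysconfig/network-scripts}"
    mac="$2"
    if [ -z "$mac" ]; then
        echo "usage: write_bridge_ifcfg DIR MAC" >&2
        return 1
    fi

    # eth0 becomes a port of bridge0 and gets no IP address of its own
    cat > "$dir/ifcfg-eth0" <<EOF
DEVICE="eth0"
BRIDGE="bridge0"
HWADDR="$mac"
ONBOOT="yes"
EOF

    # bridge0 requests the guest's address via DHCP on libvirt's network
    cat > "$dir/ifcfg-bridge0" <<EOF
DEVICE=bridge0
TYPE=Bridge
BOOTPROTO=dhcp
ONBOOT=yes
DELAY=0
EOF
}
```

On the guest itself this could be called as `write_bridge_ifcfg /etc/sysconfig/network-scripts "$(cat /sys/class/net/eth0/address)"` before restarting the network service.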

Finally, restart networking and Docker so both pick up the new configuration:

```
[root@docker1 ~]# service network restart
[root@docker1 ~]# service docker restart
```
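After the restart, one way to confirm that `eth0` really ended up as a port of `bridge0` is to look at sysfs, where a bridge device exposes a `bridge` directory and lists its ports under `brif/`. This is a minimal sketch assuming the interface names used above; `check_bridge` is a hypothetical helper, and its optional third argument exists only so it can be pointed at a fake sysfs tree for testing.

```sh
#!/bin/sh
# Hypothetical helper: checks that PORT is attached to bridge BR via sysfs.
# Usage: check_bridge [BR] [PORT] [SYSFS_NET_DIR]
check_bridge() {
    br="${1:-bridge0}"
    port="${2:-eth0}"
    net="${3:-/sys/class/net}"   # alternative root is only useful for testing

    # a bridge device has a "bridge" directory under its sysfs entry
    if [ ! -d "$net/$br/bridge" ]; then
        echo "$br is not a bridge" >&2
        return 1
    fi
    # every port enslaved to the bridge shows up under brif/
    if [ ! -e "$net/$br/brif/$port" ]; then
        echo "$port is not attached to $br" >&2
        return 1
    fi
    echo "ok: $port is attached to bridge $br"
}
```

If the check passes, `ip -4 addr show dev bridge0` should show the address `bridge0` obtained on libvirt's network.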
